All You Need to Know About Cybersecurity Gap Analysis

Imagine trying to secure a house by installing the most expensive smart locks on the front door, while leaving the back window unlatched and the basement door wide open. You’ve spent the money, but are you actually safer?

A cybersecurity gap assessment is essentially a “home security audit” for your digital estate. It looks past the flashy tools to find the forgotten windows and weak hinges in your security policies and controls across people, process, and technology.

And that’s not theoretical: Verizon’s 2024 DBIR reports that 68% of breaches involve the human element, which is exactly where “unlatched windows” tend to hide. Today, we’ll explore how this process helps you identify vulnerabilities, meet regulatory and compliance requirements, and improve security maturity without wasting effort.

What is a Cybersecurity Gap Analysis?

A cybersecurity gap analysis, sometimes called an IT security gap analysis, is a structured assessment that compares an organization’s current security posture to a desired target state (such as a standard, framework, policy, or risk goal). The purpose is to find missing controls, weak processes, and compliance gaps, then prioritize the actions needed to close them.

What it Compares

A gap analysis typically measures the difference between:

- Current state: What controls, policies, tools, and practices you have today, including how they fit your technology stack

- Target state: What you should have to meet a requirement (example: NIST CSF, ISO 27001, CIS Controls, HIPAA, PCI DSS)
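At its simplest, the current-versus-target comparison is a set difference: every target requirement with no matching control is a gap. The Python sketch below assumes hypothetical control IDs and requirement text (not drawn from NIST CSF, ISO 27001, or any other framework) and treats each control as simply present or absent; a real assessment also scores partial implementation.

```python
from typing import Dict, Set


def find_gaps(target: Dict[str, str], current: Set[str]) -> Dict[str, str]:
    """Return target requirements that have no matching control in the current state."""
    return {cid: text for cid, text in target.items() if cid not in current}


# Hypothetical target requirements (ID -> requirement text), not taken from any real framework.
target_state = {
    "IAM-1": "MFA enforced on all privileged accounts",
    "LOG-1": "Identity provider logs forwarded to the SIEM",
    "BCK-1": "Tier 1 backups restore-tested at least once a year",
}

# Hypothetical IDs of controls confirmed to be in place today.
current_state = {"LOG-1"}

gaps = find_gaps(target_state, current_state)
for cid, requirement in sorted(gaps.items()):
    print(f"GAP {cid}: {requirement}")
```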

What it Evaluates

It often looks across areas like:

- Governance, risk, and compliance (GRC)

- Asset management and inventory

- Identity and access management (IAM)

- Network and endpoint security

- Vulnerability management

- Logging, monitoring, and detection

- Incident response readiness

- Data protection and encryption

- Third-party and supply chain risk

- Security awareness and training

- Validation activities such as penetration testing, especially against evolving threats

Outputs You Usually Get

A good cybersecurity gap analysis produces:

- A list of gaps mapped to requirements

- Risk ratings for each gap (impact and likelihood)

- A remediation roadmap with priorities, owners, and timelines

- Sometimes a maturity score (example: 1–5) for each security domain

Simple Example

If a framework requires multi-factor authentication (MFA) on all privileged accounts, but your environment only has MFA on email, the gap analysis flags:

- Gap: MFA not applied to privileged accounts

- Risk: High (account takeover impact)

- Fix: Enforce MFA for admin roles, implement conditional access, validate coverage

Difference Between a Gap Analysis and a Risk Assessment

Gap analysis and risk assessment often get grouped together, but they answer different questions and produce different outputs. Here’s a table that clearly shows the difference between a gap analysis and a risk assessment:

Gap Analysis vs Risk Assessment Table

| Category | Gap Analysis | Risk Assessment |
|---|---|---|
| Primary goal | Measure where your current security posture falls short of a target standard or requirement | Measure what can realistically harm the organization and how serious it would be |
| Focus | Control coverage, maturity, compliance readiness | Threats, vulnerabilities, likelihood, impact, and residual risk |
| Typical inputs | Policies, procedures, control evidence, audit artifacts, framework checklists | Asset inventory, threat modeling, vulnerability data, incident history, business impact analysis |
| Typical output | Gap list, maturity scores, remediation roadmap mapped to framework requirements | Risk register, risk ratings, treatment plan (reduce/accept/transfer/avoid), prioritized risk actions |
| Best use case | Preparing for audits, building baseline controls, assessing compliance readiness | Prioritizing security spend and effort based on real-world business risk |
| Example (No MFA for admins) | “MFA is required under our framework. This is a gap.” | “Admin takeover risk is high impact. Likelihood depends on exposure and controls. Fix is urgent.” |
| When to run it | During audits, program buildout, annual control reviews | Quarterly or annually, after major changes, after incidents, or when threat activity shifts |

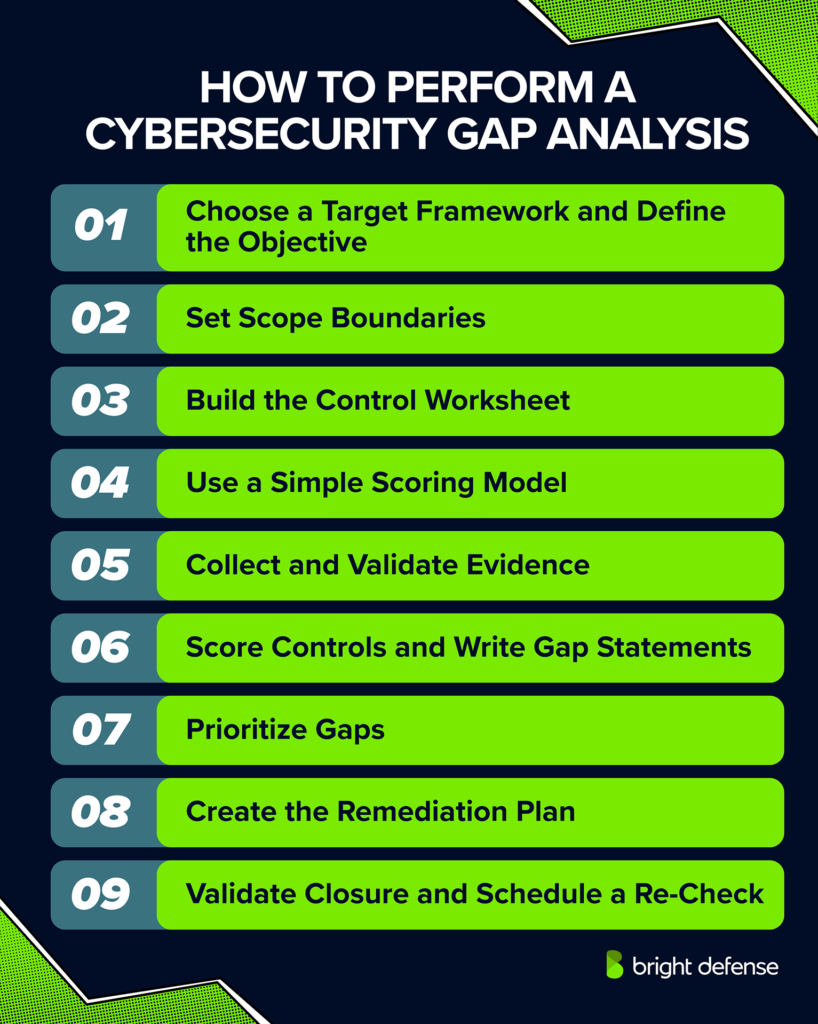

How To Perform a Successful Cybersecurity Gap Analysis (9-Step Method)

A gap analysis becomes useful when it gives you a defensible view of your current security posture, shows exactly what is missing, and turns those findings into a prioritized action plan. The steps below are practical for real environments, whether you’re preparing for an audit, reducing risk fast, or building a security program.

1) Choose 1 Target Framework and Define the Objective

Start with a single benchmark, not a mix. A gap analysis works best when it has a clear reference point such as NIST CSF 2.0, CIS Controls v8, ISO/IEC 27001:2022, PCI DSS, or your internal standard. Write a short objective statement that explains why you are doing the analysis and what decisions it should support. A clear objective also keeps the work focused as evolving external and insider threats shift the risk picture.

Good objective examples:

- “Assess our environment against CIS IG1 and produce a 6-month remediation plan.”

- “Evaluate our controls against ISO 27001:2022 to prepare for certification readiness.”

- “Map our current controls to NIST CSF 2.0 and define a target profile for leadership.”

This small step prevents scope creep and reduces disagreement later.

2) Set Scope Boundaries

Scope determines what your results actually mean. Document what is included and excluded so stakeholders understand where the assessment applies, and define an evidence window so scoring is consistent across teams. This is also where you confirm which systems hold customer or other sensitive data and which teams own access management, since those areas usually carry the highest risk exposure and the most direct impact on customer trust.

Include:

- Business units, regions, and subsidiaries

- Cloud accounts, on-prem environments, and key SaaS apps

- Critical systems and sensitive data types

Exclude:

- Systems scheduled for retirement

- Assets fully owned and operated outside your control

Evidence window examples:

- “Last 90 days of logs”

- “Current configurations as of assessment date”

- “Training records for the last 12 months”

If you skip this, people will assume your findings apply everywhere, even when they don’t.
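An evidence window is easy to apply mechanically once each piece of evidence carries a collection date. This minimal sketch flags evidence that falls outside its window; the assessment date, evidence items, and window lengths are hypothetical examples modeled on the windows listed above.

```python
from datetime import date, timedelta


def within_window(evidence_date: date, assessment_date: date, window_days: int) -> bool:
    """True if the evidence was produced no more than `window_days` before the assessment date."""
    earliest = assessment_date - timedelta(days=window_days)
    return earliest <= evidence_date <= assessment_date


assessment = date(2025, 6, 30)  # hypothetical assessment date

# Hypothetical evidence items: (description, date collected, window in days).
evidence = [
    ("SIEM log export", date(2025, 5, 15), 90),
    ("Security awareness training roster", date(2024, 3, 1), 365),
]

for name, collected, window in evidence:
    status = "in window" if within_window(collected, assessment, window) else "STALE"
    print(f"{name}: {status}")
```

Evidence dated after the assessment date is also rejected, which guards against artifacts produced after the scoring cutoff.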

3) Build Your Control Worksheet

Your worksheet is the main deliverable, so treat it as a living operational tool, not a one-time report. Each row should represent one control, requirement, or outcome, and the worksheet as a whole should show which controls already exist, which are missing, and how each maps to your chosen baseline and to any compliance requirements tied to your audit objectives.

Minimum columns I recommend:

- Control ID and short requirement statement

- Applicability (Yes/No) with rationale

- Current score (0 to 3)

- Evidence links (documents, screenshots, exports, tickets)

- Gap statement (what’s missing and where)

- Owner (single accountable person)

- Priority (High/Medium/Low)

- Remediation plan and target date

This format becomes your gap register and your remediation tracker in the same place.
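If you keep the worksheet in code or export it to a spreadsheet, a typed row makes the minimum columns explicit and catches bad values early. This is a sketch assuming Python dataclasses and CSV output; the field names, validation rules, and sample row (including the example.com evidence link) are hypothetical, not a prescribed template.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields
from typing import Iterable


@dataclass
class WorksheetRow:
    control_id: str
    requirement: str
    applicable: bool
    applicability_rationale: str
    current_score: int       # 0-3 scale
    evidence_links: str      # semicolon-separated links
    gap_statement: str
    owner: str               # single accountable person
    priority: str            # High / Medium / Low
    remediation_plan: str
    target_date: str         # ISO 8601 date, e.g. 2025-09-30

    def __post_init__(self) -> None:
        # Reject values the worksheet conventions do not allow.
        if not 0 <= self.current_score <= 3:
            raise ValueError("current_score must be between 0 and 3")
        if self.priority not in {"High", "Medium", "Low"}:
            raise ValueError("priority must be High, Medium, or Low")


def to_csv(rows: Iterable[WorksheetRow]) -> str:
    """Serialize worksheet rows to CSV text with one column per field."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=[f.name for f in fields(WorksheetRow)])
    writer.writeheader()
    for row in rows:
        writer.writerow(asdict(row))
    return buffer.getvalue()


row = WorksheetRow(
    control_id="IAM-1",  # hypothetical ID
    requirement="MFA enforced on all privileged accounts",
    applicable=True,
    applicability_rationale="Cloud admin roles are in scope",
    current_score=1,
    evidence_links="https://example.com/evidence/mfa-policy",
    gap_statement="MFA enforced for email and VPN, not for cloud admin roles",
    owner="Identity team lead",
    priority="High",
    remediation_plan="Enforce MFA via conditional access for admin roles",
    target_date="2025-09-30",
)
print(to_csv([row]))
```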

4) Use a Simple Scoring Model

A scoring model keeps discussions grounded and prevents subjective ratings. Use a simple 0 to 3 scale that separates paper controls from operational effectiveness.

Recommended scoring:

- 0: Not in place

- 1: Documented only (policy exists, no operational proof)

- 2: Implemented (operational, some proof exists)

- 3: Effective (tested, measured, consistently enforced)

A common scoring mistake is giving “2” or “3” based on intent instead of proof. The score should reflect what works today.
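The proof-over-intent rule can be encoded so a claimed score is lowered to what the evidence supports. This sketch assumes two yes/no evidence flags per control and rolls domain scores up with a plain average, which is only one possible choice; it does not produce the 1–5 maturity scale mentioned earlier.

```python
from enum import IntEnum
from statistics import mean
from typing import Dict, List


class ControlScore(IntEnum):
    NOT_IN_PLACE = 0  # not in place
    DOCUMENTED = 1    # policy exists, no operational proof
    IMPLEMENTED = 2   # operational, some proof exists
    EFFECTIVE = 3     # tested, measured, consistently enforced


def evidence_backed_score(claimed: ControlScore, has_operational_proof: bool,
                          has_test_results: bool) -> ControlScore:
    """Lower a claimed score to the highest level the collected evidence supports."""
    score = claimed
    if score >= ControlScore.EFFECTIVE and not has_test_results:
        score = ControlScore.IMPLEMENTED  # "effective" needs test or measurement results
    if score >= ControlScore.IMPLEMENTED and not has_operational_proof:
        score = ControlScore.DOCUMENTED   # "implemented" needs operational proof
    return score


def domain_averages(scores: Dict[str, List[ControlScore]]) -> Dict[str, float]:
    """Average control scores per domain (one simple roll-up choice among many)."""
    return {domain: round(mean(int(s) for s in values), 2)
            for domain, values in scores.items() if values}


scores = {
    "IAM": [
        evidence_backed_score(ControlScore.EFFECTIVE, has_operational_proof=True, has_test_results=False),
        evidence_backed_score(ControlScore.IMPLEMENTED, has_operational_proof=False, has_test_results=False),
    ],
    "Logging": [ControlScore.EFFECTIVE, ControlScore.IMPLEMENTED],
}
print(domain_averages(scores))
```

An average hides weak individual controls, so many teams also report the lowest score per domain alongside it.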

5) Collect Evidence and Validate Key Controls

Evidence separates a real gap analysis from a self-attestation. Gather evidence for each control, then validate high-risk controls with technical checks instead of assumptions, especially where coverage gaps may leave your organization vulnerable.

Evidence examples:

- Policies and standards (approved and current)

- Screenshots of enforced settings (MFA, device compliance, password policies)

- Configuration exports (IdP rules, firewall rules, EDR policy settings)

- Logs and SIEM ingestion proof

- Patch compliance reports and vulnerability scans

- Incident response artifacts (plan, tabletop results, post-incident reviews)

- Backup reports and restore test results

Key validations worth doing:

- Confirm privileged MFA enforcement across all admin roles

- Verify EDR coverage, tamper protection, and alert generation

- Test at least 1 restore for Tier 1 systems

- Check logging coverage for key systems (IdP, endpoints, cloud control plane)

- Run targeted checks for social engineering risk in support processes

If you can’t prove it, it doesn’t earn a high score.
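Validating privileged MFA usually starts from an export of admin accounts from your identity provider. The sketch below assumes a hypothetical CSV export with `user`, `role`, and `mfa_enforced` columns and a single admin role name; real identity providers use their own export formats and role names, so adapt the parsing rather than reuse it as-is.

```python
import csv
import io
from typing import List

# Hypothetical export; real identity providers use different columns and role names.
EXPORT = """user,role,mfa_enforced
alice,Global Administrator,true
bob,Global Administrator,false
carol,User,false
breakglass-01,Global Administrator,false
"""

# Assumption: the set of role names your IdP treats as privileged.
PRIVILEGED_ROLES = {"Global Administrator"}


def privileged_without_mfa(export_text: str) -> List[str]:
    """Return privileged accounts whose MFA flag is anything other than 'true'."""
    reader = csv.DictReader(io.StringIO(export_text))
    return [
        row["user"]
        for row in reader
        if row["role"] in PRIVILEGED_ROLES
        and row["mfa_enforced"].strip().lower() != "true"
    ]


print("Privileged accounts without MFA:", privileged_without_mfa(EXPORT))
```

Treating any value other than an explicit "true" as a failure keeps blank or malformed entries from passing silently.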

6) Score Each Control and Write Gap Statements

This is where you convert evidence into action. Score each control and write gap statements that are clear and specific. Avoid vague language like “needs improvement.”

A strong gap statement includes:

- What the requirement expects

- What exists today

- What’s missing

- Why it matters (risk or compliance impact)

Gap statement example:

“MFA is enforced for email and VPN, but not for cloud admin roles. Privileged accounts remain exposed to credential compromise, increasing risk of full tenant takeover.”

Clear gap statements reduce friction with engineering teams because they spell out exactly what needs to change.
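The four parts of a strong gap statement map naturally onto a small record that refuses to render when a part is missing or vague. This is a hypothetical helper, not a standard template; the field labels and the single vague-phrase check are assumptions, and the sample values restate the MFA example above.

```python
from dataclasses import dataclass


@dataclass
class GapStatement:
    requirement: str  # what the requirement expects
    current: str      # what exists today
    missing: str      # what's missing, and where
    impact: str       # why it matters (risk or compliance impact)

    def render(self) -> str:
        parts = {
            "Requirement": self.requirement,
            "Today": self.current,
            "Gap": self.missing,
            "Impact": self.impact,
        }
        # Every part must be filled in.
        for label, text in parts.items():
            if not text.strip():
                raise ValueError(f"Gap statement is missing the '{label}' part")
        # Reject the vague wording the guidance warns against.
        if "needs improvement" in self.missing.lower():
            raise ValueError("Too vague: state exactly what is missing and where")
        return " | ".join(f"{label}: {text}" for label, text in parts.items())


statement = GapStatement(
    requirement="MFA on all privileged accounts.",
    current="MFA is enforced for email and VPN.",
    missing="MFA is not enforced for cloud admin roles.",
    impact="Privileged accounts remain exposed to credential compromise, "
           "increasing risk of full tenant takeover.",
)
print(statement.render())
```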

7) Prioritize Gaps

Not every gap is urgent. Prioritize using business impact, likelihood, and external obligations such as contractual commitments and industry-specific requirements. Add a timeline so the plan becomes executable.

Priority factors:

- Likely business impact (data loss, downtime, fraud)

- Likelihood of exploitation (exposure, known attack patterns)

- Regulatory or client deadlines

- Dependency chain (what must happen first)

Practical timeline buckets:

- 0 to 90 days: critical risk reducers (MFA, logging gaps, exposed assets)

- 3 to 6 months: foundational improvements (patch SLAs, segmentation, IR exercises)

- 6 to 12 months: maturity improvements (advanced detections, automation, deeper governance)

If everything is “high,” nothing will be acted on.
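The priority factors and timeline buckets can be combined into a simple rule of thumb. This sketch assumes 1–3 ratings for impact and likelihood, multiplies them into a 1–9 score, and pulls any gap with a deadline inside 90 days into the first bucket; the thresholds and sample gaps are illustrative, and the dependency chain is not modeled.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Gap:
    name: str
    impact: int      # 1 (low) to 3 (high): data loss, downtime, fraud
    likelihood: int  # 1 (low) to 3 (high): exposure, known attack patterns
    deadline_days: Optional[int] = None  # days until a regulatory or client deadline, if any


def risk_score(gap: Gap) -> int:
    """Impact x likelihood, giving a score from 1 to 9."""
    return gap.impact * gap.likelihood


def timeline_bucket(gap: Gap) -> str:
    """Map a gap to a timeline bucket; thresholds are illustrative assumptions."""
    score = risk_score(gap)
    if score >= 6 or (gap.deadline_days is not None and gap.deadline_days <= 90):
        return "0-90 days"
    if score >= 3:
        return "3-6 months"
    return "6-12 months"


gaps = [
    Gap("MFA missing on cloud admin roles", impact=3, likelihood=3),
    Gap("Flat network, no segmentation", impact=3, likelihood=1),
    Gap("Detection rules tuned manually", impact=1, likelihood=2),
    Gap("Vendor security reviews overdue", impact=2, likelihood=1, deadline_days=60),
]

for gap in sorted(gaps, key=risk_score, reverse=True):
    print(f"{risk_score(gap)}  {timeline_bucket(gap):<12} {gap.name}")
```

The deadline override reflects that a low-risk gap can still be urgent when a client or regulator has set a date.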

8) Create the Remediation Plan

A gap analysis is only valuable if it leads to execution. For each high-priority gap, define exactly what will be delivered, who owns it, and how success is measured. This is often where expert guidance helps keep the plan realistic and tied to business outcomes.

For each gap, document:

- Owner (1 accountable person)

- Deliverable (specific change)

- Dependencies (what must be done first)

- Timeline (start, milestone, completion)

- Success metric (coverage %, SLA, test pass result)

Examples of measurable deliverables:

- “Enforce MFA for all privileged roles across all tenants, including break-glass accounts.”

- “Reach 98% EDR coverage with tamper protection enabled.”

- “Patch critical vulnerabilities within 14 days, exceptions tracked and approved.”

This turns your worksheet into a real security roadmap with a repeatable, structured approach.
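Success metrics such as coverage percentages and patch SLAs are straightforward to compute once you have the raw counts and dates. This sketch uses the 98% EDR target and the 14-day critical patch SLA from the examples above; the asset counts, finding IDs, and dates are made up for illustration, and approved exceptions are simply excluded from the breach count.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


def coverage_pct(covered: int, total: int) -> float:
    """Percentage of in-scope assets covered, rounded to one decimal place."""
    if total <= 0:
        raise ValueError("total must be greater than zero")
    return round(100 * covered / total, 1)


@dataclass
class Finding:
    finding_id: str
    detected: date
    patched: Optional[date] = None
    exception_approved: bool = False  # tracked and approved exception


def sla_breaches(findings: List[Finding], as_of: date, sla_days: int = 14) -> List[str]:
    """IDs of critical findings patched late, or still open past the SLA, without an approved exception."""
    breaches = []
    for f in findings:
        if f.exception_approved:
            continue
        end = f.patched or as_of  # still open: measure age up to the as-of date
        if (end - f.detected).days > sla_days:
            breaches.append(f.finding_id)
    return breaches


# Hypothetical data for illustration.
edr = coverage_pct(covered=1970, total=2000)
print(f"EDR coverage: {edr}% (target 98%): {'met' if edr >= 98 else 'not met'}")

findings = [
    Finding("VULN-001", detected=date(2025, 6, 1), patched=date(2025, 6, 10)),
    Finding("VULN-002", detected=date(2025, 6, 1), patched=date(2025, 6, 20)),
    Finding("VULN-003", detected=date(2025, 6, 10), exception_approved=True),
    Finding("VULN-004", detected=date(2025, 6, 20)),
]
print("SLA breaches:", sla_breaches(findings, as_of=date(2025, 6, 30)))
```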

9) Validate Closure and Schedule a Re-Check

A gap closes when there is proof, not when someone says it’s done. Require updated evidence for closure, then set a cadence for re-checks, because controls drift over time. Re-checks also keep your security maturity goals realistic as the control environment changes with the business, including new third-party vendors and major platform shifts.

Closure proof examples:

- Screenshot of policy enforcement

- Audit logs showing coverage

- Test results (restore tests, detection tests)

- Completed tabletop exercise documentation

Suggested cadence:

- Quarterly for fast-moving environments

Semiannually (every 6 months) for stable environments

- Re-run after major changes (cloud migrations, mergers, new vendors, new identity platform)

This prevents security regression, keeps your gap analysis from going stale, and maintains a clear picture of what still needs attention.
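Scheduling re-checks can be automated so they do not depend on memory. This is a minimal sketch that assumes quarterly means every 3 months and reads the stable-environment cadence as every 6 months; it also pulls the next re-check forward to the date of a major change when one lands before the scheduled review. The environment labels are hypothetical.

```python
import calendar
from datetime import date
from typing import Optional

# Assumed cadences in months for the hypothetical environment labels.
CADENCE_MONTHS = {"fast-moving": 3, "stable": 6}


def add_months(d: date, months: int) -> date:
    """Add calendar months, clamping to the last day of shorter months."""
    month_index = d.month - 1 + months
    year = d.year + month_index // 12
    month = month_index % 12 + 1
    day = min(d.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def next_recheck(last_review: date, environment: str,
                 major_change: Optional[date] = None) -> date:
    """Next scheduled re-check, pulled forward if a major change lands first."""
    scheduled = add_months(last_review, CADENCE_MONTHS[environment])
    if major_change is not None and last_review < major_change < scheduled:
        return major_change  # re-run after a major change rather than waiting
    return scheduled


print(next_recheck(date(2025, 1, 31), "fast-moving"))
print(next_recheck(date(2025, 1, 15), "stable", major_change=date(2025, 3, 1)))
```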

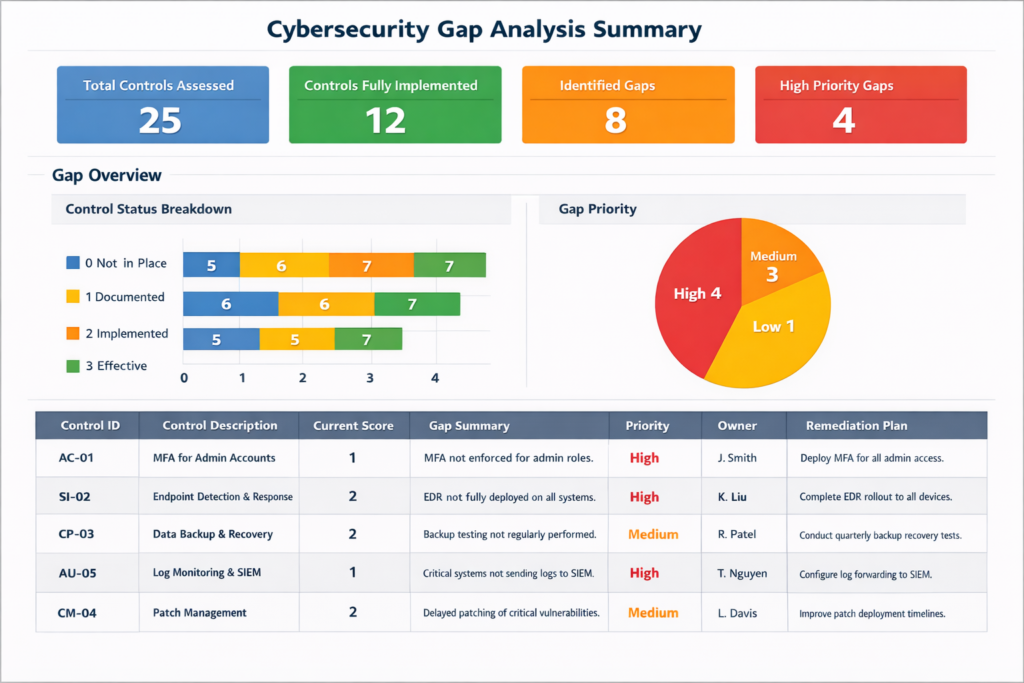

Below is a sample Cybersecurity Gap Analysis report for your reference and review:

How Bright Defense Can Help

At Bright Defense, we help you understand where your security program stands today and what needs to change to meet your risk and compliance goals. Our team conducts structured cybersecurity gap analyses that map your current controls against your chosen framework, then turns the findings into a practical remediation plan your team can execute.

We focus on clear priorities, measurable progress, and audit-ready evidence. Whether you need SOC 2 readiness, ISO 27001 support, HIPAA alignment, or a broader security posture review, we help you close gaps faster and build a program that holds up under real-world pressure.

FAQs

What is the biggest gap in cybersecurity?

Cybersecurity’s real gap is execution. Most organizations know what to do but can’t sustain the basics at scale every day: identity control, fast patching, accurate asset inventory, and vendor risk governance. The numbers tell the story: identity attacks hit 600M per day, only about 54% of edge-device vulnerabilities get fully remediated (median 32 days), third parties are involved in 30% of breaches, and the global cybersecurity workforce gap is 4.8M. When those basics slip, security defenses fail under real-world operational load.

What is an ISO 27001 gap analysis?

An ISO 27001 gap analysis is a pre-audit assessment that checks your current ISMS against ISO/IEC 27001 Clauses 4–10 and your Annex A controls plus Statement of Applicability (SoA) to show what is missing, weak, or undocumented before certification.

It also tests whether your ISMS and the controls it relies on are actually implemented as intended, not just documented. This is often where hidden weaknesses surface, especially in evidence quality and control ownership.

It outputs a gap matrix with evidence, a gap register with owners and due dates, an updated risk treatment plan and SoA, and a short audit readiness plan. In ISO/IEC 27001:2022, Annex A has 93 controls, and the transition period from the 2013 edition ended on October 31, 2025.

What are the main types of gap analysis?

Most sources group gap analysis into four types:

1. Compliance Gap Analysis

Compares current controls against required standards or regulations to identify compliance gaps.

2. Risk-Based Gap Analysis

Identifies gaps between existing controls and the organization’s actual risk exposure, prioritizing high-impact risks.

3. Control Maturity Gap Analysis

Evaluates how well security controls are designed, implemented, and operating versus a target maturity level.

4. Capability (Program) Gap Analysis

Assesses gaps in overall security capabilities across people, process, and technology.

What is a SOC 2 gap analysis?

A SOC 2 gap analysis is a readiness assessment that compares your current controls, processes, and evidence against the AICPA Trust Services Criteria (TSC) and the SOC 2 system description criteria to show what must be fixed before a CPA can issue a SOC 2 report. It also helps clarify your organization’s security posture in auditor-friendly terms.

It typically outputs a control-to-criteria matrix, a gap list with owners and due dates, and a draft or updated system description and control narratives. It is not a SOC 2 report and includes no attestation opinion.

Can ChatGPT perform a cybersecurity gap analysis?

Yes, ChatGPT can support a gap analysis if you provide the scope, control set, and real evidence, but it cannot act as a credible standalone auditor because it cannot independently verify configurations, observe operations, or validate evidence authenticity. Even with incomplete inputs, it can help you document likely weaknesses.

- Best use: build checklists and evidence requests, review the documents you share, and draft outputs such as a gap register, remediation plan, control narratives, and audit readiness plan.

- Limits: it cannot confirm that controls work in production or replace a certification audit, so validate its results with a qualified security lead.
